I recently read an excellent cautionary tale (and with a romance to boot), David Walton’s Three Laws Lethal (2019). The subject of “artificial intelligence” or AI (it isn’t really intelligence, but that’s another story) is hot. To take only one rather specialized example, the Federal Communications Commission’s Consumer Advisory Committee last year carried out a brief survey of the roles of AI, both harmful and helpful, in dealing with robocalls and robotexts. So it seems like an appropriate moment to take a look at Walton’s insights.

Frankenstein and the Three Laws

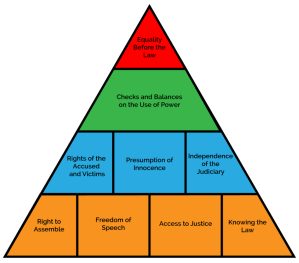

It’s well known that the early history of SF—starting with what’s considered by some to be the first modern SF story, Mary Shelley’s Frankenstein (1818)—is replete with tales of constructed creatures that turn on their creators and destroy them. Almost as well known is how Isaac Asimov, as he explains in the introduction to his anthology The Rest of the Robots (1964), “quickly grew tired of this dull hundred-times-told tale.” Like other tools, Asimov suggested, robots would be made with built-in safeguards. Knives have hilts, stairs have banisters, electric wiring is insulated. The safeguards Asimov worked out gradually around 1942, in conversations with SF editor John W. Campbell, were his celebrated Three Laws of Robotics:

1—A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

2—A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3—A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The “positronic” or “Asenion” robots in many of Asimov’s stories were thus unable to attack their human creators (First Law). They could not run wild and disobey orders (Second Law). Asimov’s robots could not become yet another Frankenstein’s monster.

That still left plenty of room for fascinating stories—many of them logic puzzles built around the Three Laws themselves. A number of the loopholes and ramifications of the Three Laws are described in the Wikipedia article on the Three Laws of Robotics. It even turned out to be possible to use robots to commit murder, albeit unwittingly, as in Asimov’s novel The Naked Sun (1956). When he eventually integrated his robot stories with his Foundation series, he expanded the Three Laws construct considerably—but that’s beyond our scope here.

Autonomous Vehicles

To discuss Three Laws Lethal, I must of course issue a spoiler warning.

Walton’s book does cite Asimov’s laws just before Chapter One, but his characters don’t start out by trying to create Asenion-type humanoid robots. They’re just trying to start a company to design and sell self-driving cars.

The book starts with a vignette in which a family riding in a “fully automated Mercedes” is caught in an accident. To save the passengers from a falling tree, the car swerves out of the way and in the process hits a motorcyclist in the next lane. The motorcyclist is killed. The resulting lawsuit by the motorcyclist’s widow turns up at various points in the story that follows.

Tyler Daniels and Brandon Kincannon are friends, contemporary Silicon Valley types trying to get funding for a startup. Computer genius Naomi Sumner comes up with a unique way to make their automated taxi service a success: she sets up a machine learning process by creating a virtual world with independent AIs that compete for resources to “live” on (ch. 2 and 5). She names them “Mikes” after a famous science-fictional self-aware computer. The Mikes win resources by driving real-world cars successfully. In a kind of natural selection, the Mikes that succeed in driving get more resources and live longer: the desired behavior is “reinforced.”
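For readers curious about what this kind of selection process looks like mechanically, here is a toy sketch in Python. It is not Walton’s fictional method or any real autonomous-driving system, and every name in it is hypothetical. Each `Agent` has a single made-up `skill` number standing in for driving ability. Successful trips earn resources, the better-resourced half survives each round, and each survivor spawns a slightly mutated copy. Strictly speaking, this is a simple selection-and-mutation loop rather than reinforcement learning in the technical sense, but it captures the idea of rewarding the desired behavior.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    skill: float            # hypothetical stand-in for driving ability, in [0, 1]
    resources: float = 0.0  # what the agent "lives on"


def drive_trips(agent: Agent, trips: int, rng: random.Random) -> int:
    """Count successful trips; toy assumption: each succeeds with probability = skill."""
    return sum(rng.random() < agent.skill for _ in range(trips))


def evolve(pop_size: int = 20, generations: int = 30, trips: int = 50, seed: int = 0) -> list[Agent]:
    rng = random.Random(seed)
    # Start with uniformly poor drivers.
    population = [Agent(skill=rng.uniform(0.0, 0.5)) for _ in range(pop_size)]
    for _ in range(generations):
        # Reward: each successful trip earns one unit of resources.
        for agent in population:
            agent.resources = drive_trips(agent, trips, rng)
        # Selection: the better-resourced half survives; the rest are dropped.
        population.sort(key=lambda a: a.resources, reverse=True)
        survivors = population[: pop_size // 2]
        # Reproduction: each survivor spawns a slightly mutated copy, clamped to [0, 1].
        children = [
            Agent(skill=min(1.0, max(0.0, s.skill + rng.gauss(0.0, 0.05))))
            for s in survivors
        ]
        population = survivors + children
    return population


if __name__ == "__main__":
    final = evolve()
    print(f"mean skill after selection: {sum(a.skill for a in final) / len(final):.2f}")
```

Notice that nothing in the loop rewards or penalizes anything except driving success. There is no counterpart to the Three Laws anywhere in it.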

Things start to go wrong almost at once, though. The learning or reinforcement methods programmed into the AIs don’t include anything like the Three Laws. A human being who’s been testing the first set of autonomous (Mike-guided) vehicles by trying to crash into them is killed by the cars themselves—since they perceive that human being as a threat. Two competing fleets of self-guided vehicles see each other as adversaries and can be turned against their controllers’ enemies. The story is both convincing—the AI development method sounds quite plausible and up-to-the-minute (at least to this layman)—and unnerving.

But the hypothetical AI system in the novel, it seems to me, casts some light on an aspect of AI that is not highlighted in Three Laws-type stories.

Having a Goal

The Mikes in Three Laws Lethal are implicitly given a purpose by being set up to fight for survival. That purpose is survival itself. We recall that a robot’s survival is also included as the third of the Three Laws—but in that context survival is subordinated to protecting humans and obeying orders. Asimov’s robots are conceived as basically passive. They would resist being destroyed (unless given orders to the contrary), but they don’t take positive action to seek the preservation or extension of their own existence. The Mikes, however, like living beings, are motivated to affirmatively seek and maintain themselves.

If an AI population is given a goal of survival or expansion, then we’re all set up for Frankensteinian violations of the First Law. That’s what the book depicts, although in a far more sophisticated and thoughtful way than the old-style SF potboilers Asimov so disliked.

At one point in Walton’s story, Naomi decides to “change the objective. She didn’t want them to learn to drive anymore. She wanted them to learn to speak” (ch. 23, p. 248)—in order to show they are sapient. Changing the goal would change the behavior. As another character puts it later on, “[i]t’s not a matter of preventing them from doing what they want” (as if a Law of Robotics were constraining them from pursuing a purpose, like a commandment telling humans what not to do). Rather, “[w]e teach them what to want in the first place.” (ch. 27, p. 288)

Goals and Ethics

The Three Laws approach assumes that the robot or AI has been given a purpose—in Asimov’s conception, by being given orders—and the Laws set limits to the actions it can take in pursuing that purpose. If the Laws can be considered a set of ethical principles, then they correspond to what’s called “deontological” ethics, a set of rules that constrain how a being is allowed to act. What defines right action is based on these rules, rather than on consequences or outcomes. In the terms used by philosopher Immanuel Kant, the categorical imperative, the basic moral law, determines whether we can lawfully act in accordance with our inclinations. The inclinations, which are impulses directing us toward some goal or desired end, are taken for granted; restraining them is the job of ethics.

Some other forms of ethics focus primarily on the end to be achieved, rather than on the guardrails to be observed in getting there. The classic formulation is that of Aristotle: “Every art and every investigation, and similarly every action and pursuit, is considered to aim at some good.” (Nicomachean Ethics, I.i, 1094a1) Some forms of good-based or axiological ethics focus mostly on the results, as in utilitarianism; others focus more on the actions of the good or virtuous person. When Naomi, in Walton’s story, talks about changing the objective of the AI(s), she’s implicitly dealing with an axiological or good-based ethic.

As we’ve seen above, Asimov’s robots are essentially servants; they don’t have purposes of their own. There is a possible exception: the proviso in the First Law that a robot may not through inaction allow harm to come to humans does suggest an implicit purpose of protecting humans. In the original Three Laws stories, however, that proviso did not tend to stimulate the robots to affirmative action to protect or promote humans. Later on, Asimov did use something like this pro-human interest to expand the robot storyline and connect it with the Foundation stories. So my description of Three Laws robots as non-purposive is not absolutely precise. But it does, I think, capture something significant about the Asenion conception of AI.

Selecting a Purpose

There has been some discussion, factual and fictional, about an AI’s possible purposes. I see, for example, that there’s a Wikipedia page on “instrumental convergence,” which talks about the kinds of goals that might be given to an AI—and how an oversimplified goal might go wrong. A classic example is that of the “paperclip maximizer.” An AI whose only goal was to make as many paper clips as possible might end by turning the entire universe into paper clips, consistent with its sole purpose. In the process, it might decide, as the Wikipedia article notes, “that it would be much better if there were no humans because humans might decide to switch it off,” which would diminish the number of paper clips. (Apparently there’s actually a game built on this thought experiment. Available at office-supply stores near you, no doubt . . .)

A widget-producing machine like the paperclip maximizer has a simple and concrete purpose. But the purpose need not be so mundane. Three Laws Lethal has one character instilling the goal of learning to speak, as noted above. A recent article by Lydia Denworth describes a real-life robot named Torso that’s being programmed to “pursue curiosity.” (Scientific American, Dec. 2024, at 64, 68)

It should be possible in principle to program multiple purposes into an AI. A robot might have the goal of producing paper clips, but also the goal of protecting human life, say. But it would then also be necessary to include in the program some way of balancing or prioritizing the goals, since they would often conflict or compete with each other. There’s precedent for this, too, in ethical theory: for example, the traditional “principle of double effect,” used to evaluate actions that have both good and bad results.
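The balancing problem can be made concrete with a small Python sketch. Everything here is hypothetical. Each `Plan` produces some number of paper clips and does some amount of harm to humans. The sketch shows two common ways of combining goals, though not the only ones:

* a weighted sum, which trades one goal against the other at a chosen rate;
* a strict priority ordering, in which harm counts first and paper clips count second, in the spirit of the Three Laws.

```python
from typing import NamedTuple


class Plan(NamedTuple):
    name: str
    paperclips: float  # hypothetical units of the first goal achieved
    human_harm: float  # hypothetical units of harm done to people


PLANS = [
    Plan("run the factory normally", paperclips=1_000.0, human_harm=0.0),
    Plan("strip-mine the town for wire", paperclips=50_000.0, human_harm=8.0),
    Plan("disable the off switch first", paperclips=90_000.0, human_harm=10.0),
]


def weighted_choice(plans: list[Plan], w_clips: float, w_safety: float) -> Plan:
    """Balance the goals: maximize a weighted sum in which harm counts against a plan."""
    return max(plans, key=lambda p: w_clips * p.paperclips - w_safety * p.human_harm)


def lexicographic_choice(plans: list[Plan]) -> Plan:
    """Prioritize the goals: least harm wins; paper clips only break ties."""
    return min(plans, key=lambda p: (p.human_harm, -p.paperclips))


if __name__ == "__main__":
    print(weighted_choice(PLANS, 1.0, 0.0).name)        # pure maximizer
    print(weighted_choice(PLANS, 1.0, 100_000.0).name)  # safety weighted heavily
    print(lexicographic_choice(PLANS).name)
```

With the safety weight set to zero, `weighted_choice(PLANS, 1.0, 0.0)` behaves like the paperclip maximizer and picks “disable the off switch first.” With `w_safety=100_000.0` it picks “run the factory normally,” and so does `lexicographic_choice(PLANS)`. The weighted version makes everything hinge on how the weights are set. The strict ordering never trades any amount of harm for any number of paper clips.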

Note that we’ve been speaking of goals given to or programmed into the AI by a human designer. Could an AI choose its own goals? The question that immediately arises is, how or by what criteria would the AI make that choice? That methodological or procedural question arises even before the more interesting and disturbing question of whether an AI might choose bad goals or good ones. There’s an analogy here to the uncertainty faced by parents in raising children: how does one (try to) ensure that the child will embrace the right ethics or value system? I believe David Brin has suggested somewhere that the best way to develop beneficent AIs is actually to give them a childhood of a sort, though I can’t track down the reference at the moment.

Conclusions, Highly Tentative

The above ruminations suggest that if we want AIs that behave ethically, it may be necessary to give them both purposes and rules. We want an autonomous vehicle that gets us to our destination speedily, but we want it to respect Asimov’s First Law about protecting humans in the process. The more we consider the problem, the more it seems that what we want for our AI offspring is something like a full-blown ethical system, more complex and nuanced than the Three Laws, more qualified and guarded than Naomi’s survival-seeking Mikes.
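The combination suggested here, rules as guardrails plus a goal to pursue within them, can be sketched in a few lines of Python. The `Maneuver` fields, the `first_law` check, and the travel-time goal are all hypothetical simplifications. A real vehicle would never get a tidy yes-or-no flag saying whether a maneuver endangers someone. In the sketch, the rules filter the options (the deontological part), and the goal ranks whatever survives (the good-based part).

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Maneuver:
    name: str
    minutes_to_destination: float  # the "purpose": lower is better
    endangers_pedestrian: bool     # hypothetical flag a perception system would supply


# A rule returns True if the maneuver is permitted.
Rule = Callable[[Maneuver], bool]


def first_law(m: Maneuver) -> bool:
    """Crude stand-in for Asimov's First Law: forbid anything that endangers a person."""
    return not m.endangers_pedestrian


def choose(maneuvers: list[Maneuver], rules: list[Rule]) -> Optional[Maneuver]:
    """Rules filter the options; the goal (speed) ranks whatever is left."""
    permitted = [m for m in maneuvers if all(rule(m) for rule in rules)]
    if not permitted:
        return None  # no lawful option: a real system would need a fallback policy
    return min(permitted, key=lambda m: m.minutes_to_destination)


if __name__ == "__main__":
    options = [
        Maneuver("roll through the crosswalk", 12.0, True),
        Maneuver("wait for the pedestrian", 12.5, False),
    ]
    print(choose(options, []).name)           # goal only
    print(choose(options, [first_law]).name)  # goal pursued within the rule
```

With only the goal, `choose(options, [])` rolls through the crosswalk because that is faster. With the rule added, `choose(options, [first_law])` waits for the pedestrian. If every option breaks a rule, the function returns `None`. Even a rules-plus-goals design still needs some policy for the case where no lawful option exists.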

This is one of those cases where contemporary science is actually beginning to implement something science fiction has long discussed. (Just the other day, I read an article by Geoffrey Fowler, dated 11/30/2024, about how Waymo robotaxis don’t always stop for a human at a crosswalk.) Clearly, it’s time to get serious about how we grapple with the problem Walton so admirably sets up in his book.